5-Minute DevOps: Pain-Driven Development

Development is hard. It's harder when there is pain in your development workflow. If it hurts, do it more.

"Build quality in" is very important to me and all of my projects are configured to run my tests automatically when I commit a change to version control. If the tests pass, then the commit is accepted. If the tests fail, the commit is rejected. We should always look for small ways to make it harder to make mistakes, after all. This allows me to make small changes with confidence and celebrate every commit instead of crossing my fingers and waiting for the tests to run on the CI server.

My workflow looks like this:

1. Make a small change to the code and tests

2. Attempt to commit my changes

3. Wait while the tests run (I hate waiting)

4. Joy or sadness after the change is accepted or rejected.

Step 3 is a critical step. Not only do I need to trust those tests, but I need those tests to run quickly to keep flowing small changes to the trunk.
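For readers who want to try a commit gate like the one described above, here is a minimal sketch in Python. It assumes Git, where an executable `.git/hooks/pre-commit` file that exits non-zero aborts the commit. The post does not say which version control system or test runner is in use, so `TEST_COMMAND` and `run_hook` are hypothetical names, and pytest is only a placeholder for whatever runs your suite.

```python
import subprocess
import sys

# Assumption: pytest stands in for the real test runner, which the post
# does not name. Replace this with the command that runs your suite.
TEST_COMMAND = [sys.executable, "-m", "pytest", "-q"]


def run_hook(command):
    """Run the test command and return its exit code.

    Git treats a non-zero exit status from a pre-commit hook as
    "reject this commit", so the caller can pass the result to sys.exit.
    """
    result = subprocess.run(command)
    if result.returncode != 0:
        print("Tests failed; commit rejected.", file=sys.stderr)
    return result.returncode
```

A hook file built on this would end with `sys.exit(run_hook(TEST_COMMAND))`. The gate is only as pleasant as the suite is fast, which is why the rest of this story matters.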

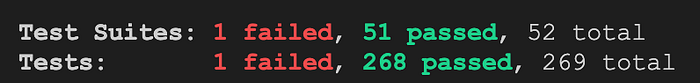

So, I make my change, try to commit it, and…

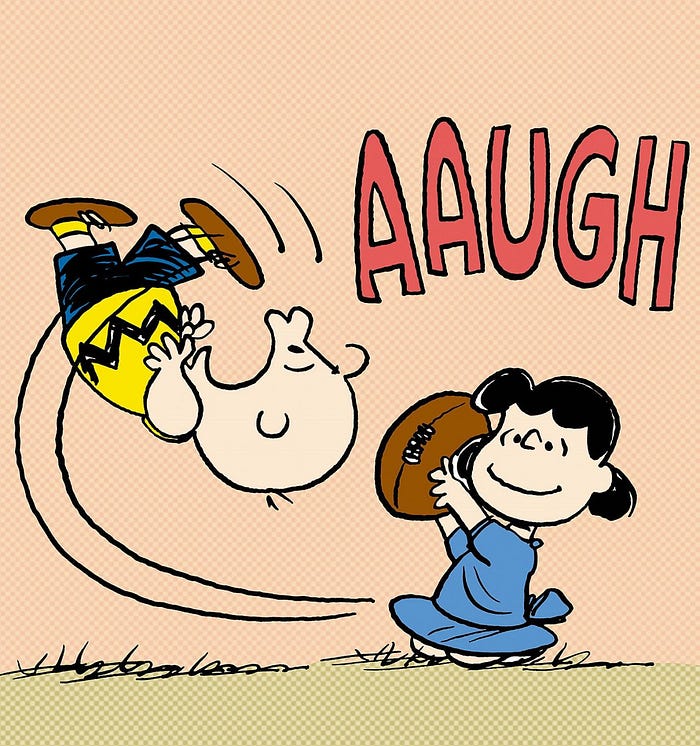

Gah!! That was just working!!

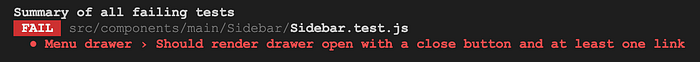

Since I trust my tests, I know this is probably my fault. So, using my tests to track down the problem, I find:

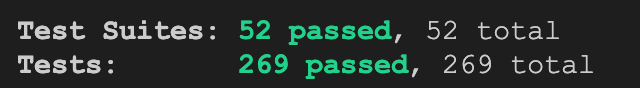

OK, I know what I did there. Should be easy enough to fix. A few minutes later…

Nirvana achieved again.

The speed of that test suite is important. If my tests take too long to run, I'll be discouraged from making very small changes. I may batch up hours of work before I run my tests because it's too painful to stare at the screen and wait. Did I mention I hate waiting? So when I get a failure after hours of making changes, it's likely to be several problems and it may take me a long time to identify what I broke. Fixing that may have cascading effects on later changes I made as well.

Also, if the tests take too long I'll lose focus and flow. I'll run the tests, grab some coffee, chat with my wife about whatever challenges she's having with some code of hers, maybe grab a snack that I don't need, or otherwise amuse myself while waiting for the tests to run. When they finish, I need to remember where I left off and try to get back the flow. Context switching is a major time thief.

The test framework my team is using will run all of the test files in as many parallel threads as my machine can handle. This means we can get fast feedback and keep the flow of development going, as long as we trust our tests. Here we had a problem: flaky tests.

Recently we had to stop running our tests in parallel because, for no obvious reason, one or more of our tests would fail about 40% of the time. Re-running the tests with no changes would usually make them pass. This happened both locally and on the CI server. That's bad. Even if our pre-commit testing passed, the pipeline might still break. It was maddening. We had a difficult time replicating it, but we discovered that if we forced the tests to run sequentially we did not have the issue. So, we set them to run in sequence while trying to understand what was happening. That sucked. Suddenly, tests that had taken around a minute to run took more than three minutes. Staring at the screen for three minutes waiting to see if my tests pass sucks. Finally, I'd had enough of the pain.

I dug in, and I kept running the tests until I could see what the failure pattern was. Apparently, a watched test is not flaky. Eventually, after enough test runs, I found a pattern with two of our tests that were flaky, but for no obvious reason. There was no state coupling them to other tests and they weren't timing out. I even started digging into how the test framework executed tests to find other possible reasons the tests could be interfering with each other. After days of getting nowhere, I finally found some hints. The tests appeared to have a race condition. The tests were expecting a UI element to exist but sometimes the test checked for it before it was created. Why this happened only when running the tests in parallel was still unclear, but this was a possible cause. So, after restructuring the test slightly to eliminate that possibility, I ran the tests in parallel and they passed.
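The post doesn't show how the test was restructured, so the following is not the actual fix. It is a minimal sketch of one common way to remove this kind of race: poll for the element until a deadline instead of checking for it once. `wait_for` and its parameters are hypothetical names for illustration.

```python
import time


def wait_for(condition, timeout=5.0, interval=0.05):
    """Poll condition() until it returns a truthy value or timeout elapses.

    Returns True as soon as the condition holds and False if the deadline
    passes first. The condition is always checked at least once.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

In a UI test, the condition would be a small function that looks up the element, and the assertion becomes `assert wait_for(element_exists)`. Many UI testing libraries ship their own waiting helpers, which are usually a better choice than a hand-rolled loop.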

I didn't celebrate. Of course they passed. They always passed about 60% of the time, and a watched test isn't flaky.

I'd lost trust in my tests and I needed to rebuild that trust. So, the next step was to stress test the tests. I wrote a shell script to run the test suite 50 times and went to lunch.
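The original shell script isn't shown here. As a rough Python equivalent, a stress runner can be as small as the sketch below. The `stress` function is a hypothetical name, and the command you pass it is whatever runs your suite.

```python
import subprocess
import sys


def stress(command, runs=50):
    """Run command `runs` times and return the 1-based numbers of failed runs.

    An empty list means every run exited with status 0.
    """
    failures = []
    for run in range(1, runs + 1):
        # Capture output so 50 runs of test logs don't flood the terminal.
        result = subprocess.run(command, capture_output=True, text=True)
        if result.returncode != 0:
            failures.append(run)
            print(f"run {run}: FAILED", file=sys.stderr)
    return failures
```

Calling `stress(["npm", "test"])` or similar and checking for an empty list afterward gives the same go-to-lunch experience. Recording which runs failed, rather than stopping at the first failure, helps when hunting for a pattern.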

Coming back from lunch, I had 50 clean test runs. I'd rebuilt some lost trust. I was reasonably confident I'd found the issue, but also annoyed that I couldn't explain why it only happened in parallel test runs. However, our tests ran three times faster and our pain was reduced. There's more we can do to improve, but there always is. In the meantime, it's easier to remain in the flow of development and we've decreased the average level of unhappiness.
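A quick back-of-the-envelope check shows why 50 clean runs rebuilds trust when a single clean run does not. The sketch below assumes each run passes independently with a fixed probability. That is a simplification, and the 60% figure is only the rough pass rate observed before the fix. `chance_of_clean_streak` is a hypothetical helper name.

```python
def chance_of_clean_streak(pass_rate, runs):
    """Probability that `runs` independent runs all pass at the given pass rate."""
    return pass_rate ** runs


# With the old ~60% pass rate, 50 clean runs in a row would be
# roughly an 8-in-a-trillion event.
still_flaky = chance_of_clean_streak(0.6, 50)
```

One passing run is what a still-broken suite would have produced more often than not. Fifty in a row would be wildly unlikely under these assumptions, though it still says nothing about a much rarer failure.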

Continuously integrating changes is a more humane development workflow, but it requires that we treat tests as first-class citizens. They can't be an afterthought. They require thoughtful architecture to be fast and reliable. Never skimp.